Advisory Boards

County Advisory Boards

| Advisory Board | Extension Liaison |

| Fairfield County Agricultural Extension Council | Kowalski, Jacqueline |

| Hartford County Extension Council | Cushman, Jen |

| Litchfield County Extension Council | Meinert, Richard |

| Middlesex County Extension Council | Worthley, Tom |

| New Haven County Extension Resource Council | Griffiths-Smith, Faye |

| New London County Agricultural Extension Council | Welch, Mary Ellen |

| Tolland County Extension Council | Champagne, Frances Pacyna |

| Windham County Extension Council | Kegler, Bonnie |

Program Advisory Boards

| Advisory Board | Extension Liaison |

| Climate Smart Microgrants Investment Committee | Martin, Jiff |

| Fairfield County 4-H Development Committee | Picard, Emily |

| Fairfield County 4-H Fair Board | Picard, Emily |

| Goat Committee | Cushman, Jen |

| Hartford County 4-H Advisory Committee | Cushman, Jen |

| Hartford County 4-H Fair Association | Cushman, Jen |

| Invasive Plant Advisory Board | Wallace, Victoria |

| Venture Farming Institute Steering Committee | Martin, Jiff |

Data Requirements

Making Changes to Advisory Board Membership

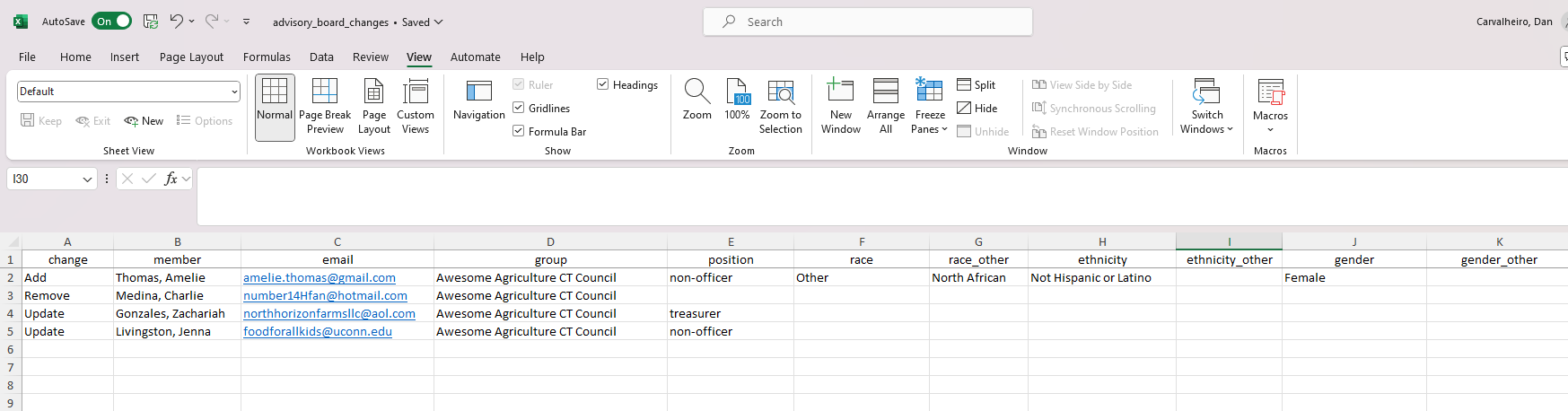

Advisory boards often undergo changes to membership and management. Any change to an advisory board requires a corresponding change to the advisory board database. Extension liaisons are therefore expected to report any advisory board changes to the Extension Data Analyst within a day or two. To report changes, the Extension liaison should email the Data Analyst using the spreadsheet template below.

advisory board changes template

The first column in the spreadsheet is used to indicate which kind of change is made to the advisory board, with three options available:

- Add - indicates a new member is being added to the advisory board.

- Remove - indicates a current member is being removed from the advisory board.

- Update - indicates that the information in one or more columns is being changed for a current member.

Below is an example of changes that could be sent to the Data Analyst.

As can be seen from the above example, a submission to the Data Analyst can include multiple changes, with a row for each specific change. Additionally:

- An "Add" should include the member's name (last, first), email, board name, position on the board (if applicable), and REG data. REG data should be collected as soon as a new member is added to the board, as it is part of the registration process. For information on how to collect REG data, see the "Race, Ethnicity, and Gender Data (REG)" section below.

- A "Remove" should include the name, email, and group of the member. This is to avoid accidentally removing information for another board member with the same name. Any other information for the specified member will also be removed.

- An "Update" should include the name, email, and group of the member. This is to avoid accidentally changing information for another board member with the same name. Beyond these identifying columns, fill in only the column(s) being changed, entering the new value in each.

- Since the group has to be specified, an Extension member can include changes for more than one board, if applicable.
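The rules above can be sketched as a small check that a liaison might run before emailing a submission. This is only an illustration: the column names (`change`, `name`, `email`, `board`, `position`) are hypothetical and may not match the headers in the actual template, and REG columns are left out of the check.

```python
# Hypothetical column names; the real template headers may differ.
IDENTIFYING = ["name", "email", "board"]
CHANGE_TYPES = {"Add", "Remove", "Update"}


def validate_change(row):
    """Return a list of problems found in one row of a change submission."""
    change = row.get("change", "").strip().title()
    if change not in CHANGE_TYPES:
        return [f"unknown change type: {row.get('change')!r}"]
    # Every change must identify the member by name, email, and board.
    problems = [
        f"{change} is missing '{col}'"
        for col in IDENTIFYING
        if not row.get(col, "").strip()
    ]
    if change == "Update":
        # An Update should carry a new value in at least one other column.
        changed = [
            col for col, value in row.items()
            if col not in IDENTIFYING and col != "change" and value.strip()
        ]
        if not changed:
            problems.append("Update does not change any column")
    return problems


rows = [
    {"change": "Add", "name": "Doe, Jane", "email": "jane@example.org",
     "board": "Goat Committee", "position": ""},
    {"change": "Remove", "name": "Roe, Sam", "email": "",
     "board": "Goat Committee"},
    {"change": "Update", "name": "Poe, Alex", "email": "alex@example.org",
     "board": "Goat Committee", "position": "Chair"},
]
report = [validate_change(row) for row in rows]
for row, problems in zip(rows, report):
    print(row["name"], "->", problems or "ok")
```

Because each row names its own board, the same check works for a submission that covers more than one board.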

Other changes to advisory boards should also be reported to the Data Analyst, including creating a new advisory board, discontinuing an advisory board, or changing the Extension liaison. For these changes, simply email the Data Analyst so they can document the change in the advisory board database. A template is not required for submitting these changes.

FAQ

Access to the advisory board database is limited to the Extension Data Analyst and Extension administration. Because the database includes advisory board members' REG data, access is given only to those who require this information for their work, in order to maintain members' privacy and confidentiality. Extension liaisons who need this data for their advisory board can email the Data Analyst to receive a copy of only their board's data.

Race, Ethnicity, and Gender Data (REG)

- A fixed instructor

- Fixed participants

- Meets for 5+ classes

- Utilizes an established Extension curriculum

Collecting REG Data

All REG data should be self-reported by participants on individual survey forms, ensuring participant confidentiality. The survey should preface any REG-related questions with the language in one of the following documents, which informs participants of why we collect this data and encourages them to answer the questions at their comfort level.

- REG data collection language for educational program participants

- REG data collection language for advisory board members

Following this language, the survey forms should have a separate item for each construct. NIFA requires collecting data and reporting on specific categories for each of the REG constructs.

Race

An assessment for participant race is required to include the following categories, with the option for participants to select more than one:

- American Indian or Alaska Native

- Asian

- Black or African American

- Native Hawaiian or Other Pacific Islander

- White

Ethnicity

An assessment for participant ethnicity is required to include the following categories:

- Hispanic or Latino

- Not Hispanic or Latino

Gender

An assessment for participant gender is required to include the following categories:

- Female

- Male

Reporting REG Data

NIFA requires reporting the prevalence of specific categories for each of the REG constructs.

Race

- American Indian or Alaska Native

- Asian

- Black or African American

- Native Hawaiian or Other Pacific Islander

- White

- Other/Unidentified for those that did not respond to the item or selected an 'Other' category

- For participants that selected more than one category, either:

  - a single Multiple-Race category

  - all applicable combinations of groups selected by participants (e.g. Asian/White)

Ethnicity

- Hispanic or Latino

- Not Hispanic or Latino

- Other/Unidentified for those that did not respond to the item or selected an 'Other' category

Gender

- Female

- Male

- Other/Unidentified for those that did not respond to the item or selected an 'Other' category
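As an illustration, the reporting rules above could be applied to raw survey responses as follows. This is a minimal sketch, not an official procedure. The function names are hypothetical, and one behavior is an assumption the text does not settle: when a participant selects a listed race together with an unlisted option, the unlisted option is ignored.

```python
from collections import Counter

RACE = {
    "American Indian or Alaska Native",
    "Asian",
    "Black or African American",
    "Native Hawaiian or Other Pacific Islander",
    "White",
}
ETHNICITY = {"Hispanic or Latino", "Not Hispanic or Latino"}
GENDER = {"Female", "Male"}
UNIDENTIFIED = "Other/Unidentified"


def report_race(selected, combine=False):
    """Map one participant's race selections to a reporting category.

    combine=False reports a single "Multiple-Race" category;
    combine=True reports the combination itself (e.g. "Asian/White").
    """
    # Assumption: selections outside the listed categories are ignored
    # when at least one listed category was also chosen.
    chosen = sorted(set(selected) & RACE)
    if not chosen:
        return UNIDENTIFIED  # no response, or only an 'Other' option
    if len(chosen) == 1:
        return chosen[0]
    return "/".join(chosen) if combine else "Multiple-Race"


def report_single(selected, categories):
    """Map a single-choice answer (ethnicity or gender) to a reporting category."""
    return selected if selected in categories else UNIDENTIFIED


responses = [["Asian"], [], ["Asian", "White"], ["Other"]]
tally = Counter(report_race(r) for r in responses)
print(tally)
print(report_race(["Asian", "White"], combine=True))
print(report_single(None, GENDER))
```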

Assessing Parity

NIFA parity assessments expect that program participant and advisory board member demographic rates should be equal to or greater than 80% of state demographic rates. Based on state and county demographic rates from the 2020 Decennial Census, parity benchmarks should be as follows:

Race

| State/County | American Indian or Alaska Native | Asian | Black or African American | Native Hawaiian & Other Pacific Islander | White | Two or More Races | Other Race |

| Connecticut | 0.36% | 3.83% | 8.62% | 0.04% | 53.14% | 7.39% | 6.64% |

| Fairfield | 0.37% | 4.29% | 8.92% | 0.03% | 48.81% | 8.59% | 8.99% |

| Hartford | 0.28% | 4.79% | 11.31% | 0.03% | 49.29% | 7.15% | 7.15% |

| Litchfield | 0.22% | 1.51% | 1.45% | 0.03% | 68.59% | 5.62% | 2.59% |

| Middlesex | 0.19% | 2.43% | 4.17% | 0.02% | 65.62% | 5.53% | 2.04% |

| New Haven | 0.38% | 3.47% | 11.00% | 0.04% | 50.31% | 7.62% | 7.18% |

| New London | 0.74% | 3.22% | 4.77% | 0.08% | 60.04% | 7.21% | 3.94% |

| Tolland | 0.15% | 4.52% | 2.91% | 0.03% | 65.62% | 4.92% | 1.84% |

| Windham | 0.56% | 1.37% | 1.65% | 0.02% | 65.30% | 6.56% | 4.54% |

Ethnicity

| State/County | Hispanic or Latino | Not Hispanic or Latino |

| Connecticut | 16.72% | 66.17% |

| Fairfield | 21.84% | 62.84% |

| Hartford | 18.14% | 65.21% |

| Litchfield | 6.84% | 73.70% |

| Middlesex | 6.26% | 74.19% |

| New Haven | 19.58% | 64.27% |

| New London | 10.41% | 70.79% |

| Tolland | 5.54% | 74.82% |

| Windham | 11.34% | 70.06% |

Gender

| State/County | Female | Male |

| Connecticut | 41.18% | 38.82% |

| Fairfield | 41.21% | 38.79% |

| Hartford | 41.37% | 38.63% |

| Litchfield | 40.53% | 39.47% |

| Middlesex | 41.05% | 38.95% |

| New Haven | 41.58% | 38.42% |

| New London | 40.49% | 39.51% |

| Tolland | 40.22% | 39.78% |

| Windham | 40.48% | 39.52% |

It is important to note that in the case where a program's target population does not reflect overall state or county demographic rates, there are alternative parity benchmarks that can be used. See the FAQ for more information.
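A parity check against these benchmarks can be sketched as below. The sketch assumes the tabulated values are already the 80% thresholds. The Female and Male columns sum to 80% in every row, which suggests this, but it should be confirmed with the Data Analyst. The participant counts are invented for illustration.

```python
# Connecticut benchmarks copied from the tables above, in percent.
BENCHMARKS = {
    "Female": 41.18,
    "Male": 38.82,
    "Hispanic or Latino": 16.72,
}


def meets_parity(count, total, benchmark_pct):
    """True if the group's share of participants is at or above the benchmark."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * count / total >= benchmark_pct


# Hypothetical program with 40 participants.
total = 40
counts = {"Female": 18, "Male": 22, "Hispanic or Latino": 5}

results = {
    group: meets_parity(counts[group], total, BENCHMARKS[group])
    for group in BENCHMARKS
}
for group, ok in results.items():
    share = 100 * counts[group] / total
    print(f"{group}: {share:.1f}% vs {BENCHMARKS[group]:.2f}% ->",
          "meets parity" if ok else "below benchmark")
```

With very small programs a single participant can move a share by several percentage points, so a result from a check like this is best read alongside the caveats in the FAQ.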

FAQ

There is a possibility that I may have an undocumented individual participate in my program. How can I collect their REG data if they do not feel comfortable reporting any information about themselves?

Participants are not required to report their REG data, but we are required to give them the opportunity to report that data. If you offer them the opportunity and they decline to report their data, then we can report them as 'Other/Unidentified'. But before it gets to that point, there are several strategies we can use to increase the chance of them reporting their data. First, it can help to explain to them the reasons why we collect the data and that we take great care in maintaining their privacy and confidentiality. Secondly, we can have them fill out a survey form that does not have their name on it if that helps them feel more comfortable reporting their REG data; in that case you can give their survey form a unique identifier so they can maintain their anonymity while keeping any other information about them on a separate document with the same identifier.

I may have participants that disagree with or are confused by the REG terms and their definitions. Can I exclude those terms from the questions on the survey form?

Yes. You can have each REG question simply ask participants to select all that apply among the available options. Alternatively, you could explain that we are using the REG terms as defined by the U.S. government for the purpose of collecting REG data.

My program does not meet parity according to the benchmarks. Does that mean I am doing a bad job?

There are many reasons why your specific program might not meet parity. For example, you may have a very small number of participants, you may have only recently started in your position, or certain groups may not be available to you. We take such factors into account when reflecting on the results of our parity analysis. As for NIFA, they ask for parity across four program areas, so they are not concerned with whether individual programs are meeting parity. However, a lack of parity can also point towards areas for improvement or indicate that aspects of the program may need to be reconsidered.

The target population for my program is very niche and its demographic make-up is not accurately represented by the census data. What can I do?

The census results provide estimates of different racial, ethnic, and gender identities across the state and by county, for individuals of all ages and professions. It is possible that your target population may be radically different demographically from the overall state or county demographic profile. In such cases, NIFA allows us to use alternative parity benchmarks that more accurately reflect the target population. However, we need to ensure that the parity benchmarks are based on a reputable source or a rigorous census carried out by us. If you think this situation applies to you, please reach out to both the Associate Dean and Data Analyst so that we can determine the best solution.

Statewide Indicators

- Adaptation and resilience to a changing climate

- Enhancing health and well-being

- Sustainable agriculture and food supply

- Sustainable landscapes across urban-rural interfaces

Development, Implementation, and History

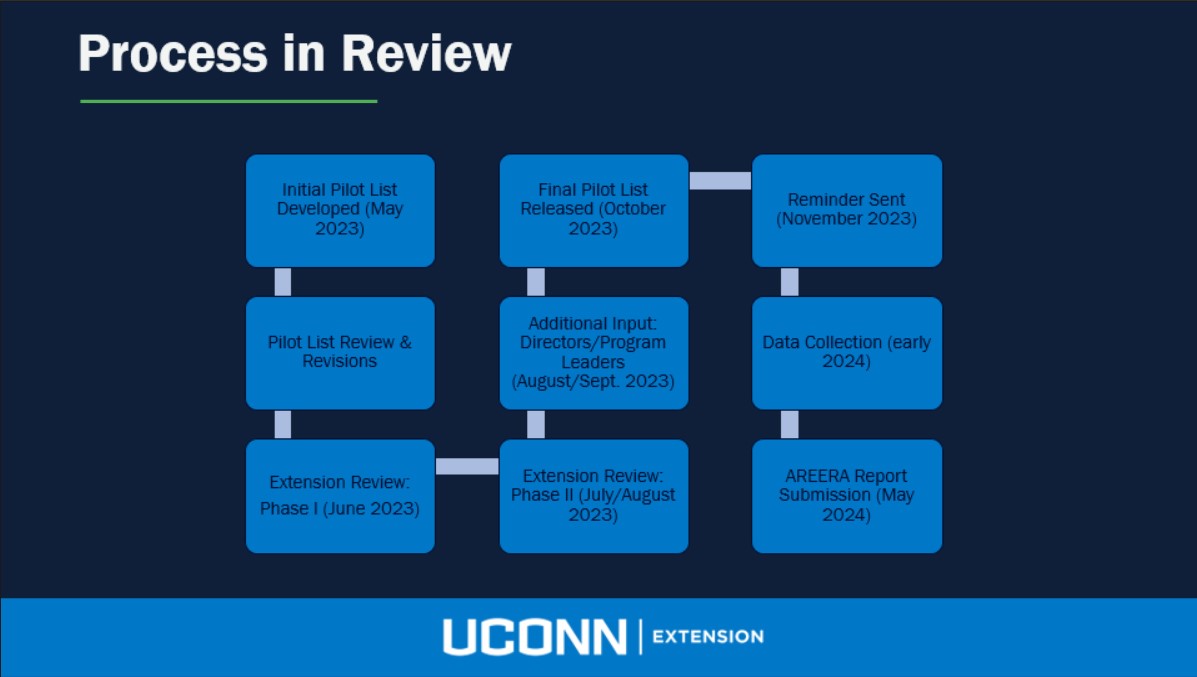

Our indicators were initially based on a set of indicators used by UF/IFAS Extension. This initial list then went through a multi-stage feedback and revision process, which is outlined in the image below:

In summer/fall 2024, Extension's Data Analyst and Evaluation Specialist will meet with Extension professionals to receive feedback on the indicators, which may lead to changes in the indicators for the 2025 reporting cycle.

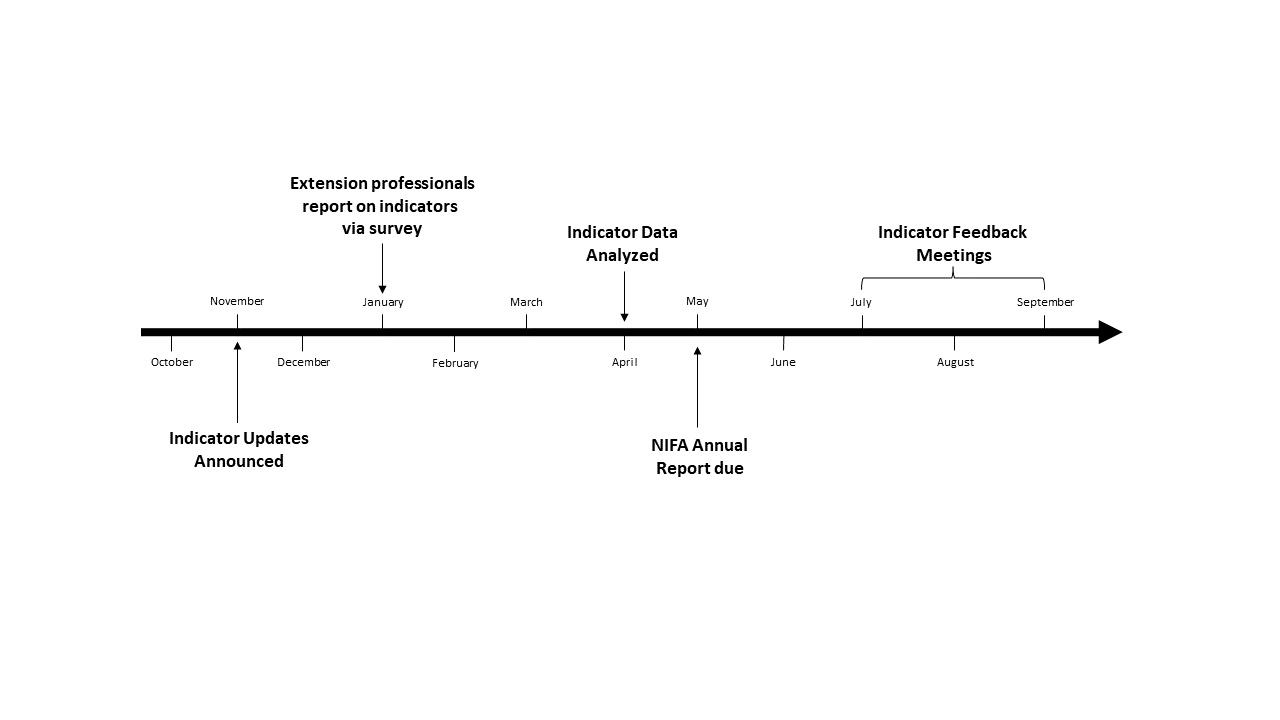

Reporting and Revisions Timeline

This timeline shows all the steps involved in the reporting and revisions of the statewide indicators. Extension professionals are directly involved in the Indicator Feedback Meetings and Reporting on the Indicators Survey, whereas the other steps are the responsibility of the Extension Evaluation Specialist and Data Analyst.

Data Collection and Management

UConn Extension does not have requirements for how Extension professionals store and manage their indicator data during the year. The only requirement is that reported indicator data meet specific requirements when submitted to the indicator survey. The following recommendations are intended to make the process more straightforward:

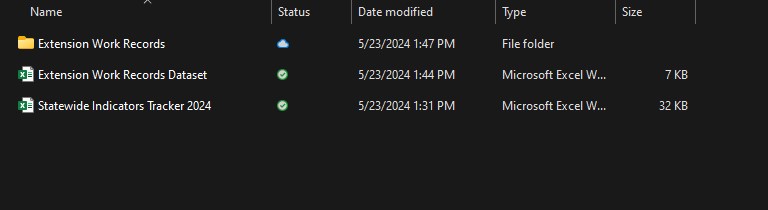

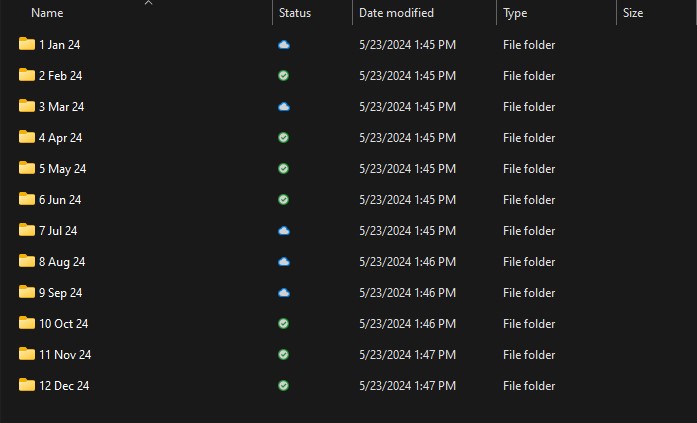

Data Archive

It is recommended that you keep an archive of your past Extension work. An archive provides an organized way of storing one's records, which can increase data quality (e.g., accuracy, contextual information) and ease of access in the future (e.g., summarizing past work, seeing past work with the same client). Such an archive should be hosted on a cloud service to ensure there is a back-up and that the archive can be accessed from multiple devices. UConn provides access to OneDrive and SharePoint as options. On the cloud service, one should create a dedicated folder to serve as the data archive. It should include a folder for storing direct evidence of one's work, a dataset that lists the work with relevant information, and a dataset that summarizes the work in relation to the statewide indicators. Here is an example of such a folder:

Extension Work Records

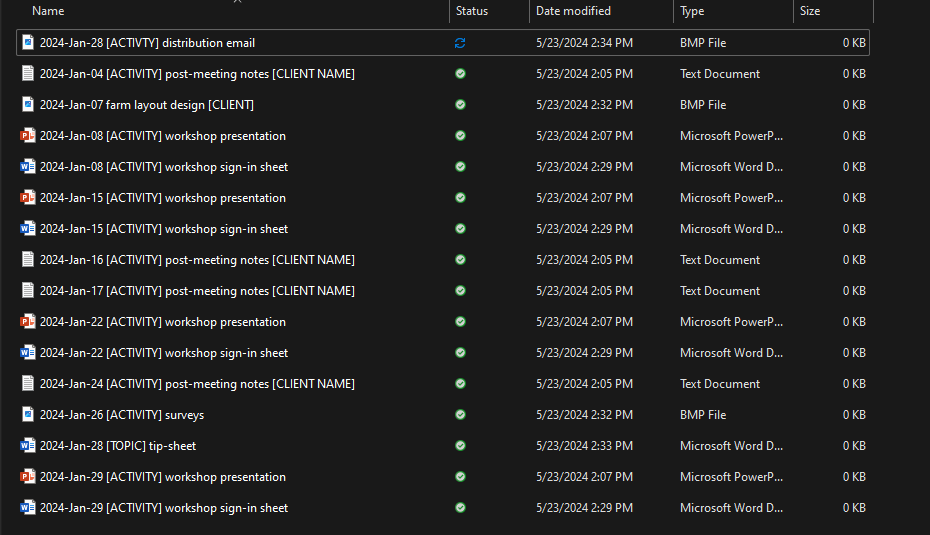

This folder should store all direct evidence of one's Extension work. This is important in the event that someone needs to provide evidence for auditing purposes or needs access to that information for future work. Direct evidence of work can include a large variety of files, such as emails, receipts, meeting recordings, post-meeting notes, participant surveys, and sign-in sheets. Depending on one's work, many files may accumulate and leave the folder disorganized. It is therefore recommended that this folder have a series of sub-folders that organize the files, for example by month of the year.

In each of the sub-folders, all relevant files should be given detailed titles for easy identification. This way, one can easily determine the contents of a file and quickly identify which file is needed at a given time.
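For those comfortable with scripting, the monthly sub-folders described above could be created in one step. This is a minimal sketch: the folder names and the `create_archive` helper are hypothetical, and the example writes to a temporary directory rather than a real OneDrive or SharePoint location.

```python
import calendar
import tempfile
from pathlib import Path


def create_archive(root, year):
    """Create one sub-folder per month under root and return the folder paths."""
    records = Path(root) / "Extension Work Records" / str(year)
    months = []
    for number in range(1, 13):
        # A zero-padded prefix keeps folders in calendar order when sorted by name.
        folder = records / f"{number:02d} {calendar.month_name[number]}"
        folder.mkdir(parents=True, exist_ok=True)
        months.append(folder)
    return months


# Demonstration in a temporary directory; point root at a synced folder in practice.
with tempfile.TemporaryDirectory() as tmp:
    folders = create_archive(tmp, 2024)
    names = [folder.name for folder in folders]
    created = all(folder.is_dir() for folder in folders)

print(names[0], "...", names[-1])
```

Running the function again for the same year is harmless, since existing folders are left untouched.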

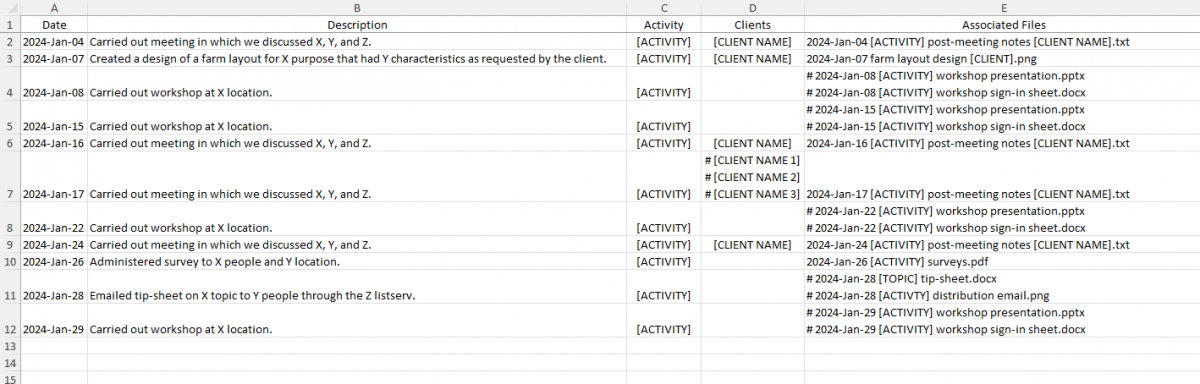

Extension Work Records Dataset

The data archive folder should also contain an Excel spreadsheet that summarizes all the work evidence stored in the sub-folders of the Extension Work Records folder. This spreadsheet can include the date of the work (for contextual information and sorting), a description of the work, additional relevant information (activity, client), and a list of the files associated with the work. If a funder requested evidence of funded work carried out several months prior, one could use this spreadsheet to identify the day the activity was carried out, who received the work or benefit, and which of the many files in the archive contain additional information about the work (if needed).
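The kind of lookup described above can be sketched in a few lines, assuming the spreadsheet has been exported to CSV. The column headers, sample rows, and the `find_records` helper are all hypothetical.

```python
import csv
import io

# Hypothetical export of the work records spreadsheet.
SAMPLE = """date,description,activity,client,files
2024-03-12,Soil health workshop,Workshop,Example Farm,2024-03-12 sign-in sheet.pdf;2024-03-12 survey.xlsx
2024-04-02,Site visit follow-up,Consultation,Example Farm,2024-04-02 email.pdf
2024-04-18,Pasture walk,Field day,Sample Dairy,2024-04-18 notes.docx
"""


def find_records(rows, client=None, start=None, end=None):
    """Return rows matching a client and an inclusive ISO date range."""
    matches = []
    for row in rows:
        if client and row["client"] != client:
            continue
        # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
        if start and row["date"] < start:
            continue
        if end and row["date"] > end:
            continue
        matches.append(row)
    return matches


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
hits = find_records(rows, client="Example Farm", start="2024-04-01")
for row in hits:
    print(row["date"], row["description"], row["files"].split(";"))
```

Recording dates in a sortable format such as YYYY-MM-DD is what makes the date filter this simple, and the same format works well at the start of file names.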

Statewide Indicators Dataset

Since the Extension Work Records Dataset above contains information on work applicable to the statewide indicators, one could read through the whole dataset to aggregate the data for the previous year when reporting on the statewide indicators survey. However, it may be easier and more manageable to aggregate this information periodically throughout the year. To help with this, we created a Statewide Indicators Tracker that allows one to enter the relevant data for each indicator for each month of the year, and then aggregates the data for the whole year in a separate tab. Additionally, one can mark an indicator as not applicable (by changing its 'Applicable' value to No and activating the filter button on the header cell), so that the spreadsheet lists only the indicators applicable to one's work. When it is time to report on the indicators survey, one can simply copy the aggregated values for the relevant indicators from the aggregate tab into the survey.
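The aggregation the tracker performs can be illustrated with a short sketch. It does not reproduce the actual spreadsheet: the indicator names and values are invented, and `yearly_totals` is a hypothetical helper that mimics summing monthly entries while skipping indicators marked as not applicable.

```python
from collections import defaultdict

# Hypothetical monthly entries: (indicator, month, value).
entries = [
    ("Workshops delivered", "Jan", 2),
    ("Workshops delivered", "Feb", 3),
    ("Participants reached", "Jan", 40),
    ("Participants reached", "Mar", 25),
    ("Volunteers trained", "Feb", 5),
]

# Mirrors the tracker's 'Applicable' column.
applicable = {
    "Workshops delivered": "Yes",
    "Participants reached": "Yes",
    "Volunteers trained": "No",
}


def yearly_totals(entries, applicable):
    """Sum monthly values per indicator, keeping only applicable indicators."""
    totals = defaultdict(int)
    for indicator, _month, value in entries:
        if applicable.get(indicator) == "Yes":
            totals[indicator] += value
    return dict(totals)


totals = yearly_totals(entries, applicable)
for indicator, total in totals.items():
    print(f"{indicator}: {total}")
```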


This serves as the current recommendation for collecting and managing data for the statewide indicators. Of course, this recommendation still requires work and time from the Extension professional. But we will continue to pursue additional methods and platforms in the future to reduce Extension professionals' time towards record-keeping while ensuring data quality and ease of access.

NIFA Report

The statewide indicators data are analyzed and summarized for NIFA's Annual Report each year. These data were first submitted in the NIFA Annual Report for Fiscal Year 2023. Specifically, the indicator results were summed across all Extension professionals, showcasing Extension's total efforts towards the work described by each indicator. Several indicators were then omitted based on non-response or very low impact or relevance. The remaining indicators were grouped and presented in the report by underlying themes identified in the results, which are listed under each critical issue in the report.

Changes and Updates to the Indicators

Each year, Extension revises the indicators based on 1) results from the most recent analysis of the indicators, 2) feedback from Extension professionals, and 3) any changes to the Extension goals and program areas. In summer/fall, Extension's Data Analyst and Evaluation Specialist hold meetings with professionals in the following groups and Extension work areas:

- 4-H

- CLEAR

- Climate Adaptation & Resilience

- Health & Well-being

- Food Systems & Agriculture

- IPM

- Sustainable Landscapes

In these meetings, the Evaluation Specialist describes the current state of the indicators, related principles of evaluation, and ideas for updates to the indicators. This is an opportunity for Extension professionals to discuss their perspectives and ideas. Following the meeting, the Data Analyst sends survey invitations to the Extension professionals for final feedback after they have had a chance to reflect on what was discussed in the meeting. The feedback is then aggregated and considered when making any changes to the indicators for the upcoming reporting cycle.

FAQ

Am I required to complete the indicators survey and if so, why?

UConn Extension is required to report to NIFA on work funded by Smith-Lever funds. Many Extension professionals' salaries and work are funded through Smith-Lever funds. We send out the indicators survey to those that receive Smith-Lever funds so that we can meet this requirement. Additionally, the indicators help showcase the work of individual Extension professionals which can be used towards promoting one's professional identity and scholarship.

When I try to access the indicators survey, it directs to a blank page with the text saying I already submitted it.

This is an issue with Qualtrics that can occur for several reasons. One common reason is that you may have accessed the survey at a previous time and accidentally submitted it while looking through all the indicators. Another common reason is that survey invitation links are personalized to each invitee, so sharing a link with someone may lead to them completing your survey instead of theirs. If someone runs into this issue, they should reach out to the Data Analyst so they can receive a new survey link.

I work with a colleague(s) on the same projects. How do we avoid duplicate data when reporting on the indicators?

When completing the indicators survey, one should enter data for all their direct work. There is currently not an ideal solution for managing situations in which Extension professionals are reporting on their shared work. We disclose this limitation when reporting the indicator results to NIFA. Additionally, while we aggregate the data to showcase the overall impact of Extension, we want each individual's data to showcase their impact even if a colleague is also reporting that same data.

What qualifies as documentation for evidence used in reporting for the indicators?

There are many potential forms of evidence depending on the type of work being carried out, including emails, post-meeting notes, recordings, receipts, surveys, etc. But generally, there should be an effort to have some reliable evidence for past work.

What is the difference between "reported" and "demonstrated" in the indicators?

For each indicator describing an impact, "reported" refers to an individual's self-report of the impact described by the indicator, whereas "demonstrated" refers to an individual showing the impact or providing observable evidence of the impact.

How should I respond to an indicator that is not applicable to my work?

One can simply report a zero or leave their response to the indicator blank.

I have other work that I carry out that does not fit with the current list of indicators. How do I add them to the indicators?

The purpose of the indicators is to describe the impacts of work shared by Extension professionals. In some cases, someone may be the only one carrying out that specific type of work. Therefore, it is not something that would typically be included as an indicator for NIFA reporting purposes. However, everyone is encouraged to create their own indicators for such work, which can help showcase their efforts and scholarship. In such cases, they can reach out to 1) the Data Analyst for help with recording and reporting that data and 2) the Evaluation Specialist for ideas of how to create indicators for that specific work. Additionally, even though it is not currently in the indicator list, it may be worthwhile adding in the future as additional professionals join Extension and/or other Extension professionals adopt similar work.